Accessible Online Meetings for those with hearing impairment

13 August 2020

Frances Harris is trained both as a Speech/Language Therapist and as a Lipspeaker. She worked for 15 years in the Audiology & Hearing Implant service at Addenbrooke’s Hospital in Cambridge, including 10 years within the NF2 multidisciplinary team, which gave her many rich encounters with those living with NF2. Her wide working knowledge of speech and voice, audiology and communication disorders makes her keen to provide communication support for people with hearing loss. Here she talks about the importance of lipreading for patients with NF2 and gives insights on virtual communication for those with hearing impairment.

Recently everyone has been forced to communicate differently – over the phone, over video calls, over email. But what about those with hearing loss or total deafness?

When we talk face to face, we use a whole repertoire of skills to decode what the other person is communicating. The words themselves are actually only a small part of that communication. We use:

- Body language – posture (which tells us about the formality of the conversation), the level of alertness (which we take as a sign of interest), eye contact (are they engaged?), body movements (what are they indicating?)

- Gestures – these confirm our understanding. They are a short cut, if you like, because we can register these very quickly. Things like nodding, pointing, a thumbs up or an ‘OK’.

- Facial expression / eyes – these convey meaning very intuitively, and are another quick link to understanding.

- Tone – the tone of voice conveys interest and emotional markers like warmth and empathy.

- Interaction – how quickly people respond or interrupt each other is a marker for interest and level of agreement/disagreement.

- Words and sentences – words and phrases actually take longer to process than the physical features of language. We have to hear them clearly enough, as well as process their grammatical and contextual meaning.

Online, using a video call, we work with only a fraction of this. Much of the body language is constrained – we can see only a small part of the scene. We lose a lot of the spontaneity and naturalness and instead have to focus on the bare bones of the words and sentences. We are left with the dregs, not the rich pickings of a face-to-face encounter. Processing the information takes longer, even without a hearing or sight impairment. Many able-bodied people have remarked that working online has been more effortful than working face to face. It wears us out.

"To support those with hearing loss or total deafness patience is key to any online meeting, allow time for observations to sink in and await responses in your virtual conversation."

– Clare Goddard, Ambassador & Chair, NF2 BioSolutions UK

Let’s take the different elements of communication and think about each in turn.

Strategy is vital. The strategies employed by the Chair of the meeting, by the participants, and by the person with hearing difficulties all play their part. I think we have to be much more conscious of strategy when working online. The Chair of the meeting should have a clear agenda (circulated in advance, or displayed in writing at the beginning), including timed breaks for everyone at intervals of about 20-30 minutes. For each section, the Chair can be encouraged to summarise the discussion, to confirm the content and action points agreed. There should be an agreed etiquette for all participants regarding turn taking and how to contribute. (Some video call platforms have a ‘hand raise’ tool for a participant to flag that they wish to speak.) A good Chair will ensure that everyone gets a turn to speak, even those who are naturally more reticent. Allow time for that. It might need the more dominant participants to consciously hold back at points. The person with hearing loss should be prepared to ask for clarification or confirmation as needed – and have a way of flagging that need to the general meeting or to the Chair privately. A private message to the Chair in the chat panel, for example, might be a useful approach.

Encourage nonverbal (visual) communication from everyone as much as possible. Up your game in terms of head nodding, thumbs up, OK signs, waving hi/goodbye, using facial expression while listening, and so on. All of these are quick for us to register, so they take less brain ‘work’ to absorb. This approach engages people more, offers more interaction, and reduces fatigue.

Explain (or refrain from) non-literal comments. Jokes, idioms, sarcasm, irony and so on are a nightmare for those with hearing loss. These forms of speech require the listener to process first the literal meaning and then the intended meaning. For example, ‘don’t hold your breath’ is used to mean ‘I’m not going to wait for that, I don’t think it will happen’. The words don’t mean that at all, but the context (and maybe also prior experience) tells us the meaning. Someone with hearing loss is already working extra hard to piece together the literal meaning, so don’t add another layer of difficulty! And please don’t use jokes and then toss them away with ‘it’s not important, it’s just a joke’. Explain why people are laughing, and include everyone.

Technology can help in lots of ways, so I will expand on that next.

I’ve been talking to colleagues and friends with hearing loss and making observations myself. The features that help are:

- Stable picture – for lipreading, seeing the facial expression, and seeing gestures and signs;

- Stable sound – for listening as well as possible;

- Option to have subtitles (also known as captions); and

- Chat panel – a way to send and receive written messages during the video call.

The stability of the internet connection will make a big difference to the video call experience. Get onto a hard-wired internet connection if you can. Wireless will often be less stable, which could lead to a juddery picture or sound. Achieving a stable connection will usually mean using a desktop or laptop rather than a tablet or mobile phone. Close other applications to reduce the drain on the processing power of your device.

Subtitles can be provided by automatic speech recognition (ASR) or by a live captioner (Electronic Notetaker) who has joined the meeting and is typing in real time. Either way, the best results are obtained by everyone speaking as clearly as they can, and by good turn taking. Hesitations, rephrasing, and unfinished sentences all make for meaningless subtitles. On the other hand, well-formulated and clearly articulated sentences are a joy to the captioner! Live captioning by an Electronic Notetaker is usually a paid service; many of these language service professionals are now offering their services online. Automated subtitles will usually contain more errors because they rely on probability and not real human decisions!

Transcribing a meeting: There are some apps which offer to transcribe (write down) what is said. These are based on ASR, using prediction and likelihood to select the most likely wording from the audio captured. Of course, they make mistakes. But they offer an option when no other subtitling is available. Otter and Live Transcribe are two apps I have tried out. When I am working online on the desktop computer, I have my mobile device nearby, running an Otter transcription alongside. This catches the gist, and indicates when the speaker changes. Otter offers 40 minutes of live transcribing on the free package, more if you upgrade. You can also upload a Zoom audio recording into your Otter account for transcription after a meeting (up to three transcriptions a month on the free package).

The picture you need. For some platforms it is possible to select (‘pin’) a particular person so that you see them all the time, regardless of who is talking in the video call. This is perfect if you want to see only the BSL interpreter or Lipspeaker, for example. Otherwise it is usually a choice between seeing (nearly) everyone in the meeting (‘gallery’ or ‘grid’ view), or seeing the active speaker (‘speaker view’). Some platforms have a limit on the number of participants that can be shown at once.

Other features like screen sharing, breakout rooms, and the total number of participants will be part of the choice for a given video call platform, but I don’t go into those aspects here.

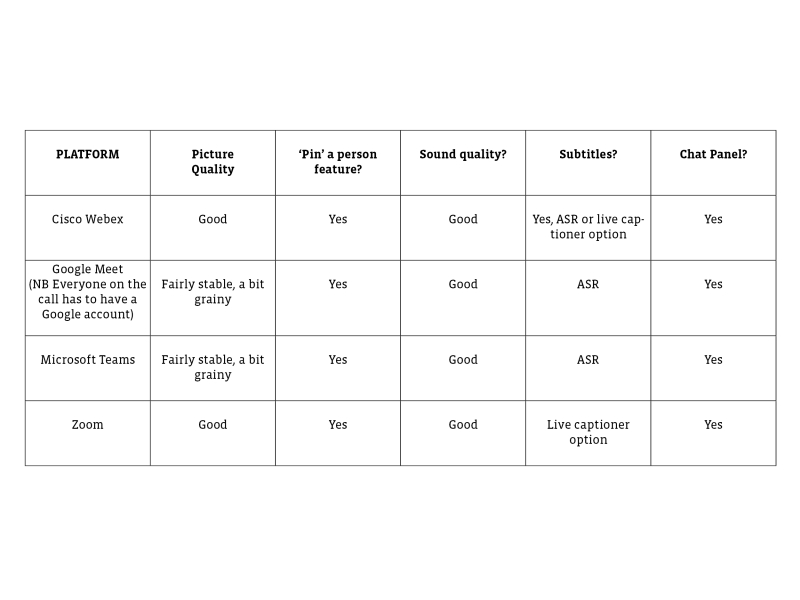

Here is a brief summary of some of my favourite video call options (in alphabetical order):

In addition, various service providers offer live speech-to-text captioning known as CART, which delivers live captions remotely to almost any screen: computers, projector screens, tablets or smartphones. CART stands for Communication Access Real-Time Translation. Live captioning provides accessibility and inclusion to people who are deaf or hard of hearing.

The response of the Language Service Professional (LSP) community to the Covid-19 lockdown has been significant. A good number of freelance BSL interpreters, lipspeakers and notetakers now offer an online service, and several major, well-known agencies have launched online interpreting/lipspeaking platforms. Some BSL online services are offered on demand (no advance booking required). Most lipspeakers or notetakers would need advance booking of 2-7 days. Advance booking ensures you have the cover you need at the required time. The language service professional will discuss your needs with you and explain how to link them into your meeting. (There are two terms you might hear: VRI and VRS. The pictures below explain the difference. VRI, Video Remote Interpreting, is when the language service professional is the one being brought into the ‘room’ by a remote connection. For example, the doctor and patient are in a consulting room and the LSP is working from home. Alternatively, if all the parties on a video call are in different places, the setup might be termed Video Relay (VRS, Video Relay Service). The names aren’t that important – what is important is getting the communication support you need in an effective way.)

Useful links and Resources

Guidance document from the UCL Deafness Cognition and Language Research Centre (DCAL): these are excellent notes on how to set up and run a remote meeting in a way that promotes accessibility for the deaf and hearing-impaired.

Webconferencing platforms and their advice on captions (subtitles):

Cisco Webex: Using automatic subtitles or adding a live captioner

Google Meet: Using captions in a live meeting

Microsoft Teams: Using live captions
